Tutorial: Playing Fetch with Spot

NOTE: This tutorial uses some deprecated APIs and will be updated in the future.

In this tutorial, you'll create a fully-functional API example, enabling Spot to autonomously play fetch with you. You'll learn how to:

- Train a machine learning model to detect a dog-toy

- Visualize the results of that model online using Spot's tablet

- Command the robot to pick up the dog-toy with its arm and the manipulation API

- Use an off-the-shelf ML model to detect a person

- Drop the dog-toy two meters away from the person

At the end, you’ll have a fully-functional API example that enables Spot to autonomously play fetch with you.

Required Materials

- Spot robot with an arm

- A dog-toy, hand towel, or any object that:

- has a distinct visual appearance

- is easy to see in the tablet view

- Spot's arm is capable of picking using the Pick Up Object option in the tablet

- A computer running an officially supported Ubuntu version

- CUDA enabled GPU

- A relatively large operational area (5x5 meters)

Contents

Collect and Label Training Data

Overview

We will use transfer learning to quickly and efficiently train a model to find a dog-toy.- Use Spot to collect images of the dog-toy

- Download pre-trained model weights

- Train the top layers of the model to recognize our dog toy

Setting up Python 3 and the Spot SDK

- Follow the [Quickstart guide](../quickstart.md) for the Spot SDK.

- Note: the virtualenv section of that guide is required for this tutorial.

Once you have successfully made Spot move using the API, you’re ready to go.

source my_spot_env/bin/activate # enter your virtualenv

cd ~/spot-sdk/python/examples/hello_spot # or wherever you installed Spot SDK

python3 hello_spot.py 192.168.80.3

Collect Images

Our goal is to collect images of the dog toy in the same environment we want to test in. If you want your model to work in many environments, you'll need to collect data in as many of those as you can.

To collect the data, we'll run the robot with the tablet while downloading images from the API. We want a diverse set of viewpoints, so we'll walk Spot around while taking the images.

Enter your Spot API virtualenv

Replace my_spot_env with the name of the virtualenv that you created using the Spot Quickstart Guide:

source my_spot_env/bin/activate

Install requirements

Install OpenCV's python bindings in your virtualenv:

python3 -m pip install opencv-python==4.5.*Directory Set Up

Make a folder called ~/fetch that we'll put everything into:

mkdir ~/fetch

cd ~/fetch

Copy (or download) the script below into a file called capture_images.py and place it in the ~/fetch folder

Live Image Capture Script

Set up imports for images and the Spot API:

import argparse

import sys

import os

import bosdyn.client

import bosdyn.client.util

from bosdyn.client.image import ImageClient

import cv2

import numpy as np

import time

Then, set up basics:

def main(argv):

parser = argparse.ArgumentParser()

bosdyn.client.util.add_base_arguments(parser)

parser.add_argument('--image-source', help='Get image from source(s)', default='frontleft_fisheye_image')

parser.add_argument('--folder', help='Path to write images to', default='')

options = parser.parse_args(argv)

Here, we add the common API arguments including --username, --password, and hostname. We also add our two arguments, --image-source and --folder, which we'll use to determine what camera to use, and where to put the resulting images.

Next, setup the client to talk to Spot:

# Create robot object with an image client.

sdk = bosdyn.client.create_standard_sdk('image_capture')

robot = sdk.create_robot(options.hostname)

bosdyn.client.util.authenticate(robot)

robot.sync_with_directory()

robot.time_sync.wait_for_sync()

image_client = robot.ensure_client(ImageClient.default_service_name)

This is standard boilerplate for the API, where we're initializing the SDK and logging in. Don't worry about this stuff too much.

Now it's time for the real work:

counter = 0

while True:

# We want to capture from one camera at a time.

# Capture and save images to disk

image_responses = image_client.get_image_from_sources([options.image_source])

dtype = np.uint8

img = np.frombuffer(image_responses[0].shot.image.data, dtype=dtype)

img = cv2.imdecode(img, -1)

# Approximately rotate the image to level.

if image_responses[0].source.name[0:5] == "front":

img = cv2.rotate(img, cv2.ROTATE_90_CLOCKWISE)

elif image_responses[0].source.name[0:5] == "right":

img = cv2.rotate(img, cv2.ROTATE_180)

# Don't overwrite an existing image

while True:

image_saved_path = os.path.join(options.folder, image_responses[0].source.name + '_{:0>4d}'.format(counter) + '.jpg')

counter += 1

if not os.path.exists(image_saved_path):

break

cv2.imwrite(image_saved_path, img)

print('Wrote: ' + image_saved_path)

# Wait for some time so we can drive the robot to a new position.

time.sleep(0.7)

We loop forever, or until we press Ctrl+C, capturing and saving images. Each time we:

- Capture the image with

get_image_from_sources - Unpack the image using numpy's

frombuffer - Optionally rotate the image, depending on which camera it is from (only the left and back cameras on Spot are upright).

- Make sure to not overwrite existing files.

- Use OpenCV to save it as a JPG.

Finally, finish up with:

return True

if __name__ == "__main__":

if not main(sys.argv[1:]):

sys.exit(1)

Running our code to capture images

Now that we have a script to capture images, let's run it (use Ctrl+C to stop capturing):

mkdir -p dogtoy/images

python3 capture_images.py 192.168.80.3 --image-source right_fisheye_image --folder dogtoy/images

Wrote: dogtoy/images/right_fisheye_image_0000.jpg

Wrote: dogtoy/images/right_fisheye_image_0001.jpg

Wrote: dogtoy/images/right_fisheye_image_0004.jpg

[...]

Wrote: dogtoy/images/right_fisheye_image_0076.jpg

Wrote: dogtoy/images/right_fisheye_image_0077.jpg

^C

First we make a directory to store our images. Then we run the code with parameters:

- Username (often

user) - Password

- IP address of the robot. Above, we've used the default IP when the robot is hosting its own access point

192.168.80.3. Yours might be different depending on how Spot is connected to your network. right_fisheye_imageto capture from the right camera- Store results in the

dogtoy/imagesfolder.

While capturing, drive the robot around to get a variety of viewpoints. You'll also want to run the script with a few different cameras to capture a diverse set of images.

Cameras:

frontleft_fisheye_imagefrontright_fisheye_imageleft_fisheye_imageright_fisheye_imageback_fisheye_image

Here's my result:

In this example, I've captured about 400 images. I probably should have put up a few more lights!

That's about the minimum you need to get something to work. If you want your ML model to work more reliably, capture more pictures in a wider variety of locations.

Label the Images

To label our images, we’ll use an open source off-the-shelf labeling program. There are many out there, but this one is simple and works great for our purposes.

Install labelImg

Use pip to install:

source my_spot_env/bin/activate

python3 -m pip install labelImg

[...]

Successfully installed PyQt5-sip-12.8.1 labelImg-1.8.4 pyqt5-5.15.2

Now run it:

labelImg

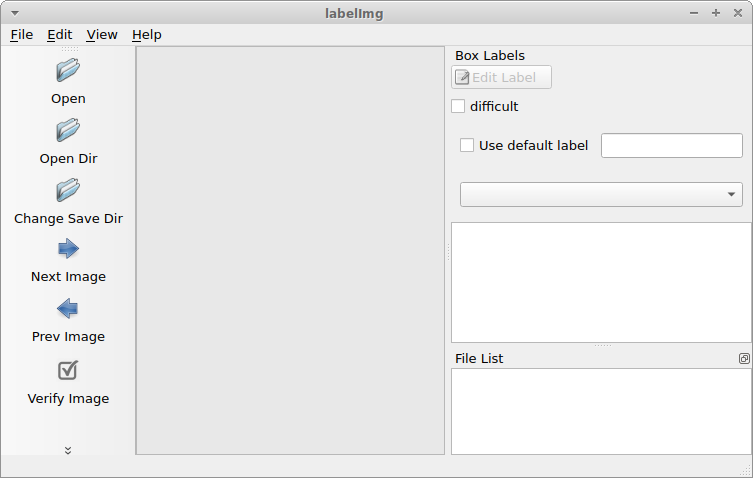

If it installed correctly, you'll see a window like this:

Open the image directory with File > Open Dir

The main keys we'll use are:

| d | Next image |

| a | Previous image |

| w | Create a box |

| del | Delete the selected box |

- Use

dto get to an image that has the dog toy in it. - Press

wthen click-and-drag to make a box on the toy. - Enter

dogtoyinto the pop-up and press OK.

Tip: If the box refuses to be a rectangle, press Ctrl to disable that mode.

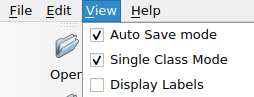

Before you label the rest of your images:

- Enable

View > Auto Save mode - Enable

View > Single Class Mode

- Press

dto go to the next image. - A save dialog will appear.

- Create a folder:

~/fetch/dogtoy/annotationsand save the labels to this location.

Verify the labels are saving

Let's make sure things are working before we spend a lot of time labeling.

Label a few more images with d and w then open your annotations folder in a file browser.

Your should have a directory structure like this:

dogtoy/

├── images

│ ├── right_fisheye_image_0000.jpg

│ ├── right_fisheye_image_0001.jpg

│ └── ...

└── annotations

├── right_fisheye_image_0000.xml

├── right_fisheye_image_0001.xml

└── ...

Open one and it should look something like this:

<annotation>

<folder>images</folder>

<filename>left_fisheye_image_0000.jpg</filename>

<path>/home/user/fetch/dogtoy/images/left_fisheye_image_0000.jpg</path>

<source>

<database>Unknown</database>

</source>

<size>

<width>480</width>

<height>640</height>

<depth>1</depth>

</size>

<segmented>0</segmented>

<object>

<name>dogtoy</name>

<pose>Unspecified</pose>

<truncated>0</truncated>

<difficult>0</difficult>

<bndbox>

<xmin>312</xmin>

<ymin>357</ymin>

<xmax>441</xmax>

<ymax>427</ymax>

</bndbox>

</object>

</annotation>

Note: The XML file stores the full path, so don't rearrange files after labeling.

Label Everything

Now that we are confident things are working, label the rest of the images.

Tip: A good rule of thumb if you're unsure about a label is to ask, "what do I want the network to do?"

For example, in one of my images I threw the dog-toy. Do I want the network to find flying dog toys? No, those aren't good for fetch. I should wait until it's on the ground. For that reason, I didn't label the flying dog toy.

Once you're done, you should have a large number of XML files which contain the labels. We are now ready to train the model!