<< Previous Page | Next Page >>

Fetch Part 5: Detecting People and Playing Fetch

Congratulations, you are one step away from a fully-functional, fetch-playing Spot! The last step is teaching Spot to detect people.

While we could train a new ML model, as we did in Part 2, instead we'll use an off-the-shelf model that is less work, and will likely yield better performance.

Loading an Off-the-Shelf Model

We're going to use the same model we used for transfer learning. This has a number of advantages:

- It has sufficient person-detection performance.

- The structure is the same, so we won't have to change how we call the model.

- Bonus: You already have it!

Copy the labels file into the model directory:

cd ~/fetch

cp models-with-protos/research/object_detection/data/mscoco_label_map.pbtxt dogtoy/pre-trained-models/ssd_resnet50_v1_fpn_640x640_coco17_tpu-8/

To load the model, call network_compute_server.py with an additional -m argument.

- If you are still running

network_compute_server.pystop it with^C

Restart it with the new model and its labels:

cd ~/fetch

python3 network_compute_server.py -m dogtoy/exported-models/dogtoy-model/saved_model dogtoy/annotations/label_map.pbtxt -m dogtoy/pre-trained-models/ssd_resnet50_v1_fpn_640x640_coco17_tpu-8/saved_model dogtoy/pre-trained-models/ssd_resnet50_v1_fpn_640x640_coco17_tpu-8/mscoco_label_map.pbtxt 192.168.80.3

python3 network_compute_server.pycalls our script.-m dogtoy/exported-models/dogtoy-model/saved_model dogtoy/annotations/label_map.pbtxtis the custom model and its labels that we ran before.-m dogtoy/pre-trained-models/ssd_resnet50_v1_fpn_640x640_coco17_tpu-8/saved_model dogtoy/pre-trained-models/ssd_resnet50_v1_fpn_640x640_coco17_tpu-8/mscoco_label_map.pbtxtis the pre-trained model and its labels.192.168.80.3is the robot's and IP address- This command assumes that the `BOSDYN_CLIENT_USERNAME` and `BOSDYN_CLIENT_PASSWORD` environment variables are set to the robot's username and password.

Upon success, you'll see a bunch of output as TensorFlow and CUDA start up. Eventually, you'll see indication that both models have loaded, as shown below:

[... lots of text ...]

Service fetch_server running on port: 50051

Loaded models:

dogtoy-model

ssd_resnet50_v1_fpn_640x640_coco17_tpu-8

Testing Person Detection

With the new model running, we can go back to the tablet and try it out.

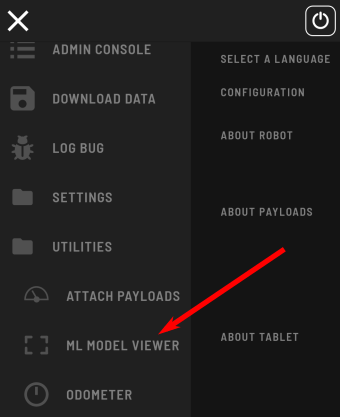

1. Select Hamburger Menu > Utilities > ML Model Viewer

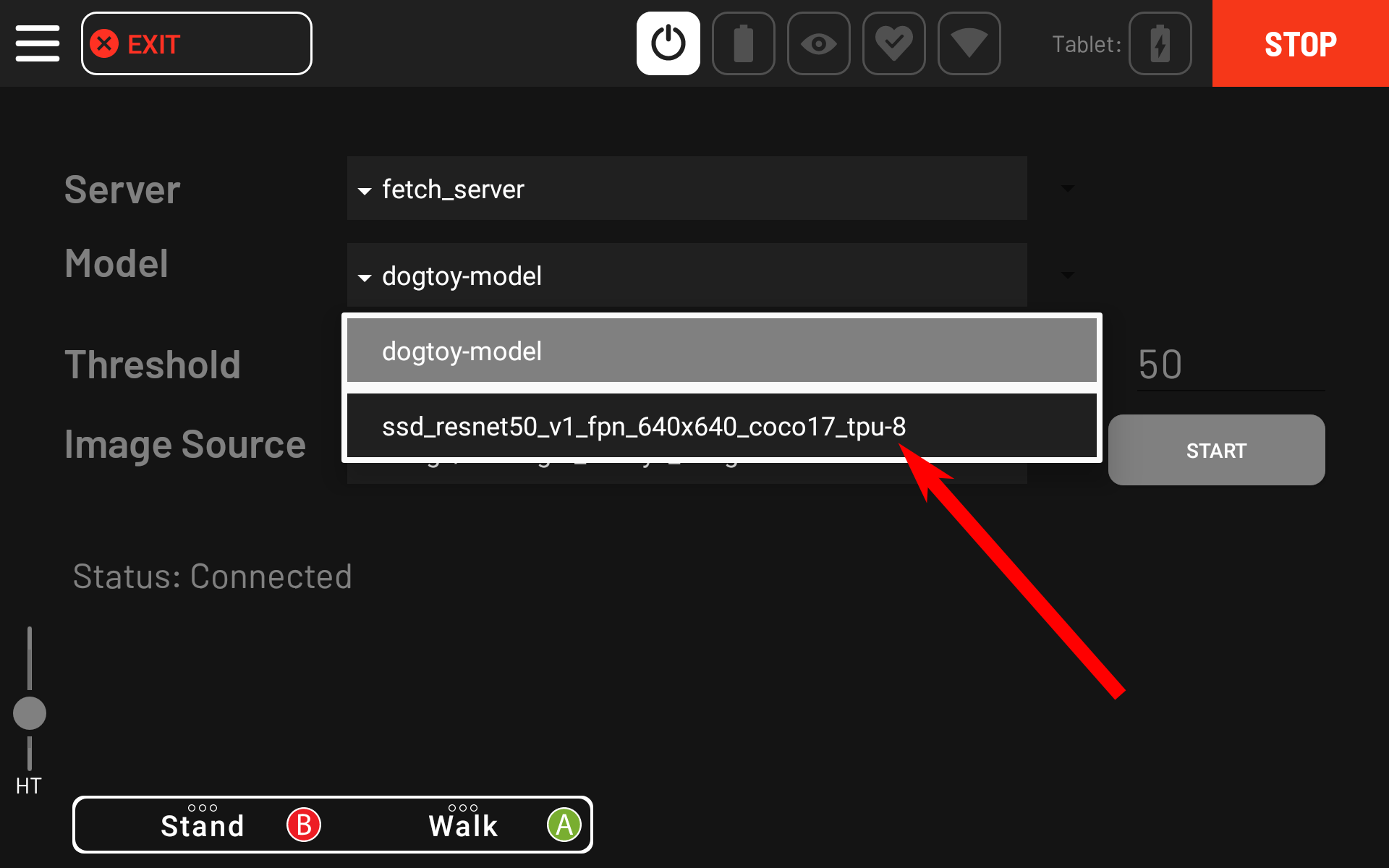

2. Select ssd_resnet50_v1_fpn_640x640_coco17_tpu-8 model and press Start.

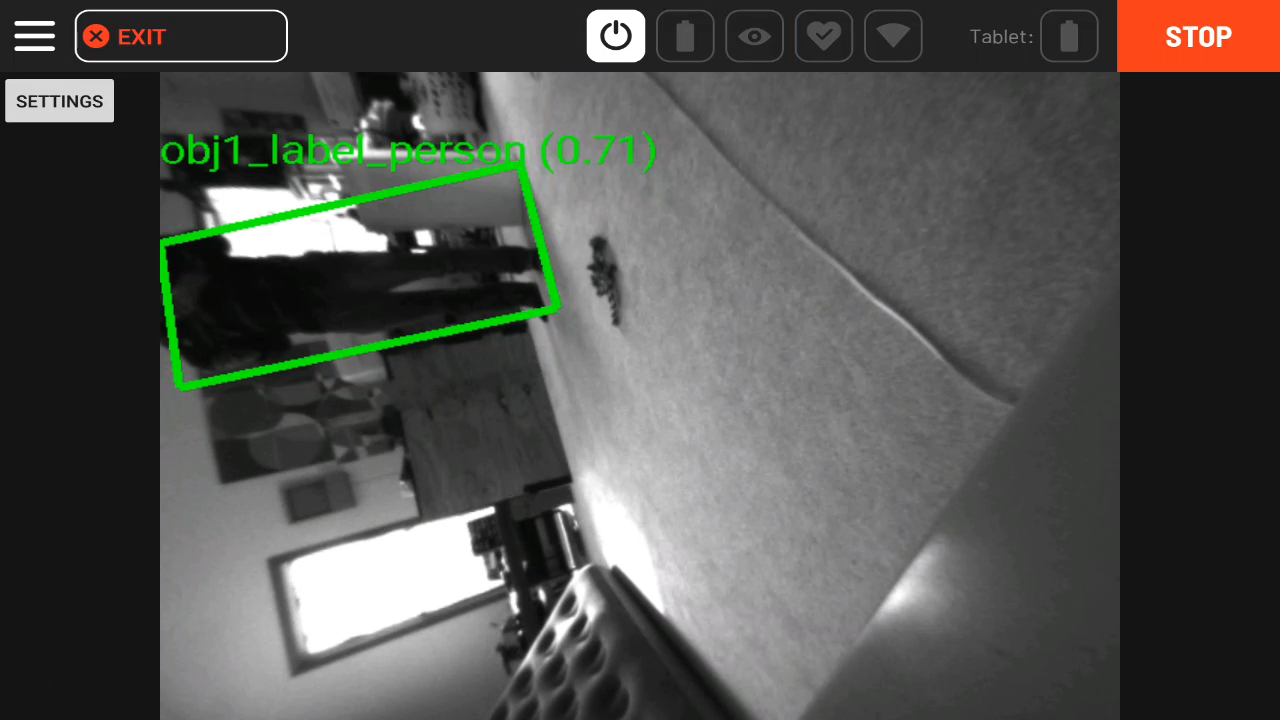

Upon success, you'll see bounding boxes around people in the video. Make sure to stay 2 meters away from the robot.

For the gif lovers out there:

Putting it All Together

We now have all the required pieces to fetch:

- Dog-toy detection

- Grasping of the toy

- Person detection

To pull it together, we'll add to our fetch.py and use the person detection to play fetch!

- (or download: fetch.py)

First, delete the following lines near the end of fetch.py

# For now, we'll just exit...

print('')

print('Done for now, returning control to tablet in 5 seconds...')

time.sleep(5.0)

break

Add new code to replace it directly after these lines:

# Wait for the carry command to finish

time.sleep(0.75) person = None

while person is None:

# Find a person to deliver the toy to

person, image, vision_tform_person = get_obj_and_img(

network_compute_client, options.ml_service,

options.person_model, options.confidence_person, kImageSources,

'person')

Same call as above, but this time we use model options.person_model and search for objects with the 'person' label.

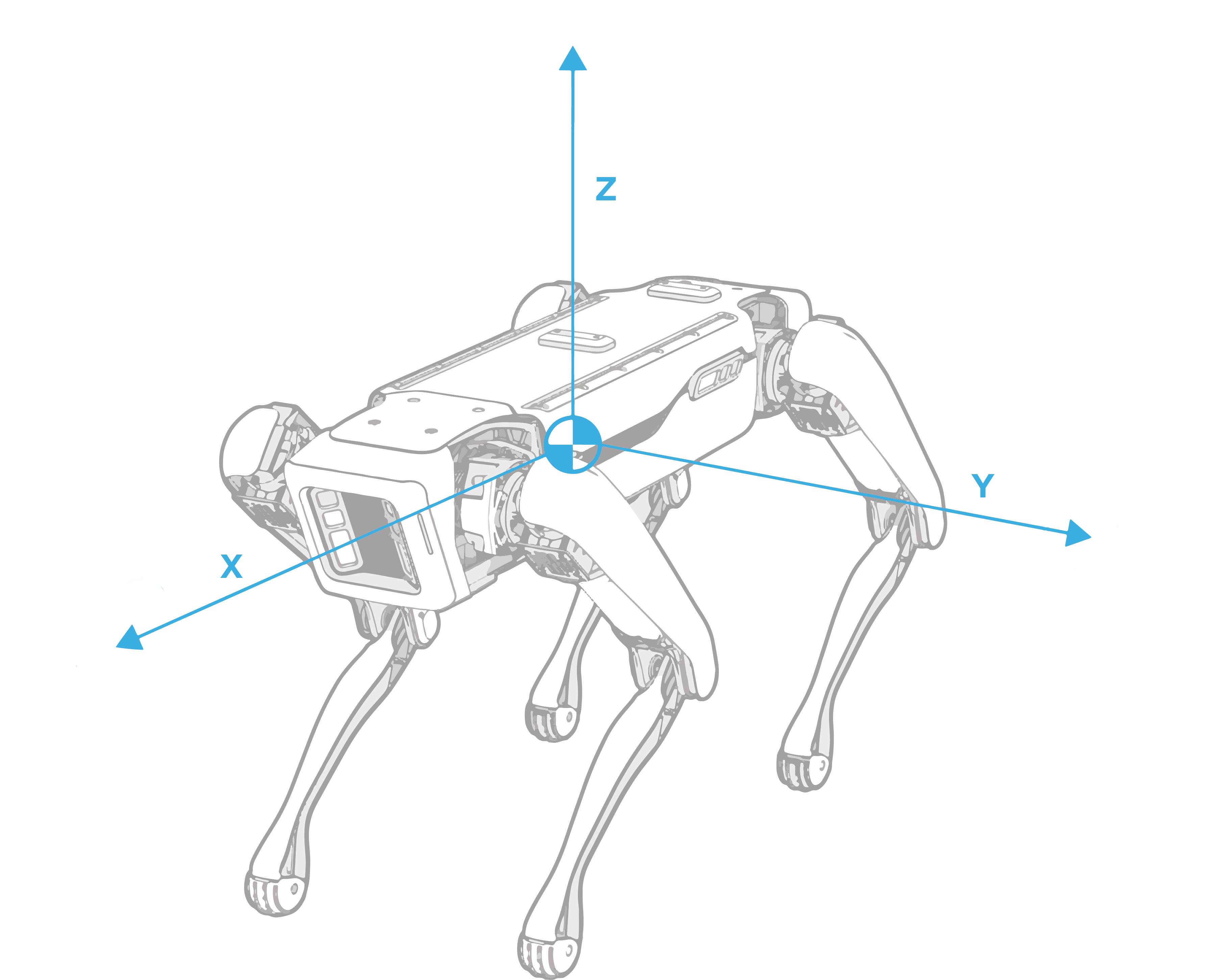

- If you're new to Spot's frame conventions, take a look at our [concept documentation](../../concepts/geometry_and_frames.html#transformations-between-spot-s-frames).

# We now have found a person to drop the toy off near.

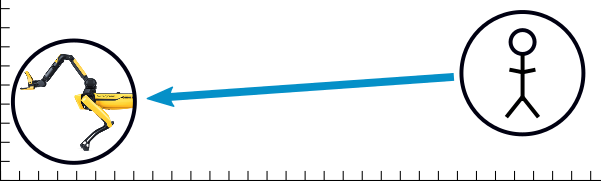

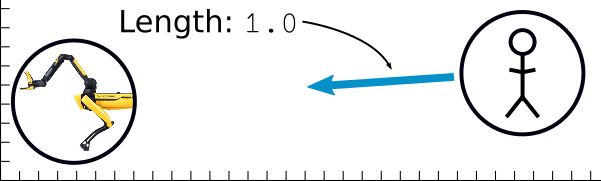

drop_position_rt_vision, heading_rt_vision = compute_stand_location_and_yaw(

vision_tform_person, robot_state_client, distance_margin=2.0)

wait_position_rt_vision, wait_heading_rt_vision = compute_stand_location_and_yaw(

vision_tform_person, robot_state_client, distance_margin=3.0)

Compute the position we'll stand to drop the toy and the position we'll stand to back up and wait. We'll fill in this function below.

# Tell the robot to go there

# Limit the speed so we don't charge at the person.

se2_pose = geometry_pb2.SE2Pose(

position=geometry_pb2.Vec2(x=drop_position_rt_vision[0],

y=drop_position_rt_vision[1]), angle=heading_rt_vision)

move_cmd = RobotCommandBuilder.synchro_se2_trajectory_command(

se2_pose,

frame_name=frame_helpers.VISION_FRAME_NAME,

params=get_walking_params(0.5, 0.5))

end_time = 5.0

cmd_id = command_client.robot_command(command=move_cmd,

end_time_secs=time.time() +

end_time)

- Set a velocity limit when walking

- Command the robot to move to our drop position and yaw

# Wait until the robot reports that it is at the goal.

block_for_trajectory_cmd(command_client, cmd_id, timeout_sec=5, verbose=True)

print('Arrived at goal, dropping object...')

Wait for the robot to walk. This helper function polls the robot for feedback using cmd_id and returns when we've arrived.

# Do an arm-move to gently put the object down.

# Build a position to move the arm to (in meters, relative to and expressed in the gravity aligned body frame).

x = 0.75

y = 0

z = -0.25

hand_ewrt_flat_body = geometry_pb2.Vec3(x=x, y=y, z=z)

# Point the hand straight down with a quaternion.

qw = 0.707

qx = 0

qy = 0.707

qz = 0

flat_body_Q_hand = geometry_pb2.Quaternion(w=qw, x=qx, y=qy, z=qz)

flat_body_tform_hand = geometry_pb2.SE3Pose(

position=hand_ewrt_flat_body, rotation=flat_body_Q_hand)

robot_state = robot_state_client.get_robot_state()

vision_tform_flat_body = frame_helpers.get_a_tform_b(

robot_state.kinematic_state.transforms_snapshot,

frame_helpers.VISION_FRAME_NAME,

frame_helpers.GRAV_ALIGNED_BODY_FRAME_NAME)

vision_tform_hand_at_drop = vision_tform_flat_body * math_helpers.SE3Pose.from_obj(

flat_body_tform_hand)

Compute the drop position. Since we're already standing in the right location, we can do a body-relative move to point the hand down in front of Spot.

Note: We convert to the vision frame using vision_tform_flat_body * ... because we don't want the robot to move relative to its body (we want a fixed point in the world).

Often this won't matter, but if the robot is disturbed, it will keep the arm in place, as opposed to holding the arm relative to the robot

# duration in seconds

seconds = 1

arm_command = RobotCommandBuilder.arm_pose_command(

vision_tform_hand_at_drop.x, vision_tform_hand_at_drop.y,

vision_tform_hand_at_drop.z, vision_tform_hand_at_drop.rot.w,

vision_tform_hand_at_drop.rot.x, vision_tform_hand_at_drop.rot.y,

vision_tform_hand_at_drop.rot.z, frame_helpers.VISION_FRAME_NAME,

seconds)

# Keep the gripper closed.

gripper_command = RobotCommandBuilder.claw_gripper_open_fraction_command(

0.0)

# Combine the arm and gripper commands into one RobotCommand

command = RobotCommandBuilder.build_synchro_command(

gripper_command, arm_command)

# Send the request

cmd_id = command_client.robot_command(command)

Build and send the arm command. We use a [synchronized command](../../concepts/arm/arm_concepts.md) to command the arm and the gripper. We don't pass anything for Spot's body to do, so it will continue to stand.

# Wait until the arm arrives at the goal.

block_until_arm_arrives(command_client, cmd_id)

# Open the gripper

gripper_command = RobotCommandBuilder.claw_gripper_open_fraction_command(

1.0)

command = RobotCommandBuilder.build_synchro_command(gripper_command)

cmd_id = command_client.robot_command(command)

# Wait for the dogtoy to fall out

time.sleep(1.5)

# Stow the arm.

stow_cmd = RobotCommandBuilder.arm_stow_command()

command_client.robot_command(stow_cmd)

time.sleep(1)

- Wait until the arm is in position for the drop

- Open the gripper

- Stow the arm

print('Backing up and waiting...')

# Back up one meter and wait for the person to throw the object again.

se2_pose = geometry_pb2.SE2Pose(

position=geometry_pb2.Vec2(x=wait_position_rt_vision[0],

y=wait_position_rt_vision[1]),

angle=wait_heading_rt_vision)

move_cmd = RobotCommandBuilder.synchro_se2_trajectory_command(

se2_pose,

frame_name=frame_helpers.VISION_FRAME_NAME,

params=get_walking_params(0.5, 0.5))

end_time = 5.0

cmd_id = command_client.robot_command(command=move_cmd,

end_time_secs=time.time() +

end_time)

# Wait until the robot reports that it is at the goal.

block_for_trajectory_cmd(command_client, cmd_id, timeout_sec=5, verbose=True)

The last part! Back up to let the user pick up the toy safely and loop.

Now we'll define some of the helper functions:

def compute_stand_location_and_yaw(vision_tform_target, robot_state_client,

distance_margin):

# Compute drop-off location:

# Draw a line from Spot to the person

# Back up 2.0 meters on that line

vision_tform_robot = frame_helpers.get_a_tform_b(

robot_state_client.get_robot_state(

).kinematic_state.transforms_snapshot, frame_helpers.VISION_FRAME_NAME,

frame_helpers.GRAV_ALIGNED_BODY_FRAME_NAME)

This function computes where to stand for the toy drop-off. It takes in:

- Target position

- Client to get robot state

- Offset distance

What we'll do is:

- Draw a line from the target position to our current position.

- Ignore that line's length.

- Move in the direction of the line, from the target, until we're

2.0meters along it.

# Compute vector between robot and person

robot_rt_person_ewrt_vision = [

vision_tform_robot.x - vision_tform_target.x,

vision_tform_robot.y - vision_tform_target.y,

vision_tform_robot.z - vision_tform_target.z

]

The subtraction of these two vectors computes the vector between the positions.

# Compute the unit vector.

if np.linalg.norm(robot_rt_person_ewrt_vision) < 0.01:

robot_rt_person_ewrt_vision_hat = vision_tform_robot.transform_point(1, 0, 0)

else:

robot_rt_person_ewrt_vision_hat = robot_rt_person_ewrt_vision / np.linalg.norm(

robot_rt_person_ewrt_vision)

We don't care how long the vector is, so we'll change it to be 1.0 meters (computing a unit vector).

- Note that we guard for a division by zero using the robot's current direction

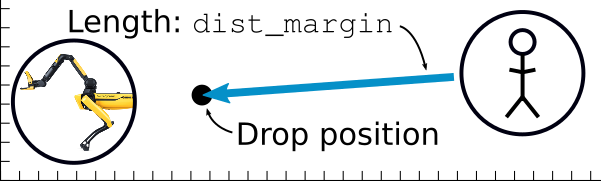

# Starting at the person, back up meters along the unit vector.

drop_position_rt_vision = [

vision_tform_target.x +

robot_rt_person_ewrt_vision_hat[0] * distance_margin,

vision_tform_target.y +

robot_rt_person_ewrt_vision_hat[1] * distance_margin,

vision_tform_target.z +

robot_rt_person_ewrt_vision_hat[2] * distance_margin

]

Now that we have a vector that is exactly 1.0 in length, we can multiply it by whatever length we want and get our drop position.

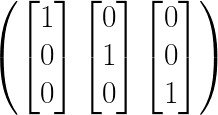

# We also want to compute a rotation (yaw) so that we will face the person when dropping.

# We'll do this by computing a rotation matrix with X along

# -robot_rt_person_ewrt_vision_hat (pointing from the robot to the person) and Z straight up:

xhat = -robot_rt_person_ewrt_vision_hat

zhat = [0.0, 0.0, 1.0]

yhat = np.cross(zhat, xhat)

mat = np.matrix([xhat, yhat, zhat]).transpose()

heading_rt_vision = math_helpers.Quat.from_matrix(mat).to_yaw()

return drop_position_rt_vision, heading_rt_vision

We want the robot to be facing the person when it drops off the dog-toy. We'll do this by constructing a rotation matrix and extracting the yaw from that.

Recall linear algebra, where we can construct a rotation matrix from three orthogonal vectors:

With numbers:

We can construct a new rotation matrix where xhat is the direction we want the robot to face.

We've already computed a unit vector, robot_rt_person_ewrt_vision_hat, that points from the person to the robot. If we negate that vector, it will point from the robot to the person:

xhat = -robot_rt_person_ewrt_vision_hat

For zhat, we'll use the gravity vector: [0, 0, 1].

Visually:

yhat is easy since it is always orthogonal to xhat and zhat, so we can find it using the cross product.

- With

xhat,yhat, andzhat, we can construct a rotation matrix: mat = np.matrix([xhat, yhat, zhat]).transpose()- We can then easily convert from a rotation matrix through a quaternion, to a yaw.

heading_rt_vision = math_helpers.Quat.from_matrix(mat).to_yaw()

def pose_dist(pose1, pose2):

diff_vec = [pose1.x - pose2.x, pose1.y - pose2.y, pose1.z - pose2.z]

return np.linalg.norm(diff_vec)

Compute the distance between two frames:

def get_walking_params(max_linear_vel, max_rotation_vel):

max_vel_linear = geometry_pb2.Vec2(x=max_linear_vel, y=max_linear_vel)

max_vel_se2 = geometry_pb2.SE2Velocity(linear=max_vel_linear,

angular=max_rotation_vel)

vel_limit = geometry_pb2.SE2VelocityLimit(max_vel=max_vel_se2)

params = RobotCommandBuilder.mobility_params()

params.vel_limit.CopyFrom(vel_limit)

return params

Set up Spot's mobility parameters to limit walking speed.

Enable longer-distance walking for Pickup

In the previous section, we had a part that was commented out, starting with:

# NOTE: we'll enable this code in Part 5, when we understand it.- This is essentially the same as what we do for walking up to a person above. Uncomment this section to allow the robot to walk further to the dogtoy.

Playing Fetch

Run the code:

python3 fetch.py -s fetch-server -m dogtoy-model -p ssd_resnet50_v1_fpn_640x640_coco17_tpu-8 192.168.80.3Arguments are:

- Name of our ML server

- Name of the dog-toy model

- Name of our person detection model

- IP address of the robot

Upon success, the robot should pick up the toy, bring it to you, drop it, and wait for you to throw it again!

Once fetch is running from your laptop, you can move the model to run on the robot in Core IO, try it out in Part 6!

Head over to Part 6: Running the model on Core IO >>

<< Previous Page | Next Page >>